In 2006, the Evaluation Gap Working Group asked, “When will we ever learn?” This week, 3ie’s Drew Cameron, Anjini Mishra, and Annette Brown (hereafter CMB) have published a paper in the Journal of Development Effectiveness that uses data on more than thirty years of published impact evaluations from 3ie’s Impact Evaluation Repository (IER) to answer the question. We offer a few spoilers below, but if you’d like to read the full paper first, it is available open access here.

There is no denying the huge growth of interest in impact evaluation in the years since the Evaluation Gap Working Group called for more and better evidence of what works in international development. Indeed, the subsequent impact evaluation craze has led to substantial shifts in research priorities among large donors, governments and small NGOs (not to mention the formation of a number of important new organisations). Meanwhile, some, like Lant Pritchett, have mused that impact evaluation might just be a passing fad, though we sincerely hope that it has more staying power than the selfie stick or Instagramming your food. Either way, the body of impact evaluation evidence is clearly growing quickly. In the first four years of this decade, more impact evaluation evidence was published than in the preceding thirty!

A number of people have begun to examine emerging trends within this new evidence base. Eva Vivalt writes about the generalisability of impact evaluations in a number of sectors (here), Ruth Levine and Bill Savedoff look at the importance of impact evaluation for building collective knowledge for policymaking (here), the World Bank provides an overview of the relevance and effectiveness of impact evaluations (here), and David Evans’s recent trip to the annual conference at the Centre for the Study of African Economies highlights that even more work is in the pipeline (here).

In early 2013, CMB began an effort to collect all impact evaluations of interventions in low- and middle-income countries (L&MICs) using a systematic search and screening process akin to those typically found in systematic reviews (see their search and screening protocol). The result of that effort is the 3ie IER, launched in 2014. Thanks to these efforts, we now have the first relatively complete picture of how far we have come and where we might be headed. To date, the IER boasts over 2,600 impact evaluations from interventions in L&MICs.

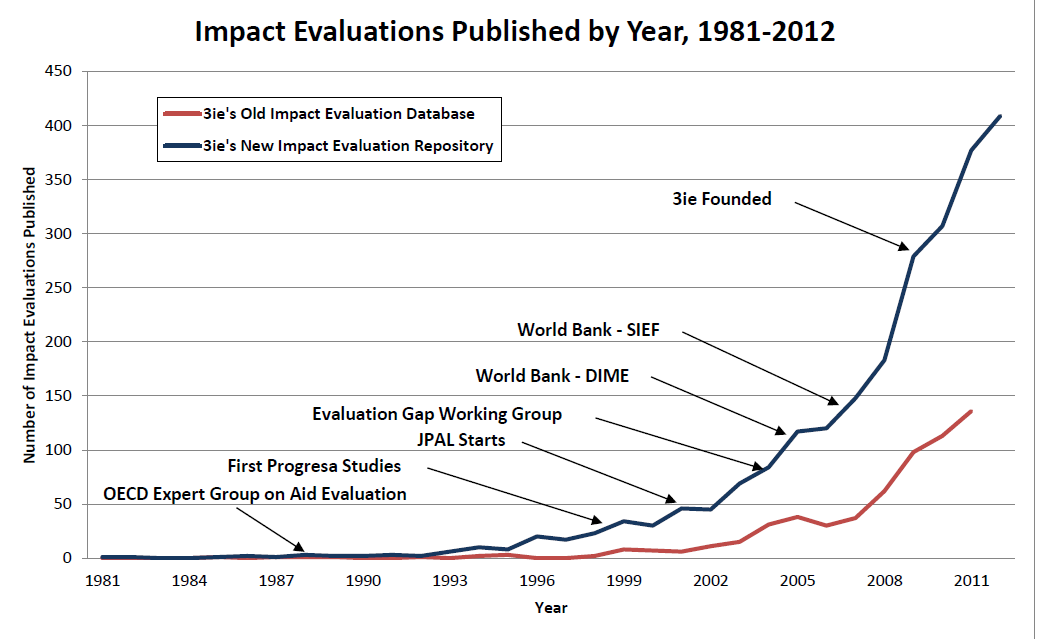

In 2013, using a trend line from indexed articles in 3ie’s old impact evaluation database, Bill Savedoff looked back on a few seminal events in the history of impact evaluation (here). His graphic showed a precipitous rise in the publication of impact evaluations over the previous five years. In the graphic below, we compare his old trend line (in red) to the IER’s more complete body of evidence (in blue). We now know that published evaluation evidence stretches back as far as 1981, and actually began to take off in the mid- to late-1990s, mostly in health. After 2000, social science publications gained a larger share of the total. Studies in all sectors increased dramatically after 2008. Evidence production still seems to be on the rise (though we’ll know more after this summer’s new round of search and screening).

We’ve learned a few other things as well. CMB find that most impact evaluations are focused on health, education, social protection, and/or agriculture. Randomised controlled trials dominate the literature in only a few sectors (health, education, information and communications technology, and water and sanitation services). Meanwhile, quasi-experimental methods are employed more frequently in agriculture and rural development, transportation, economic policy, and environment and disaster management.

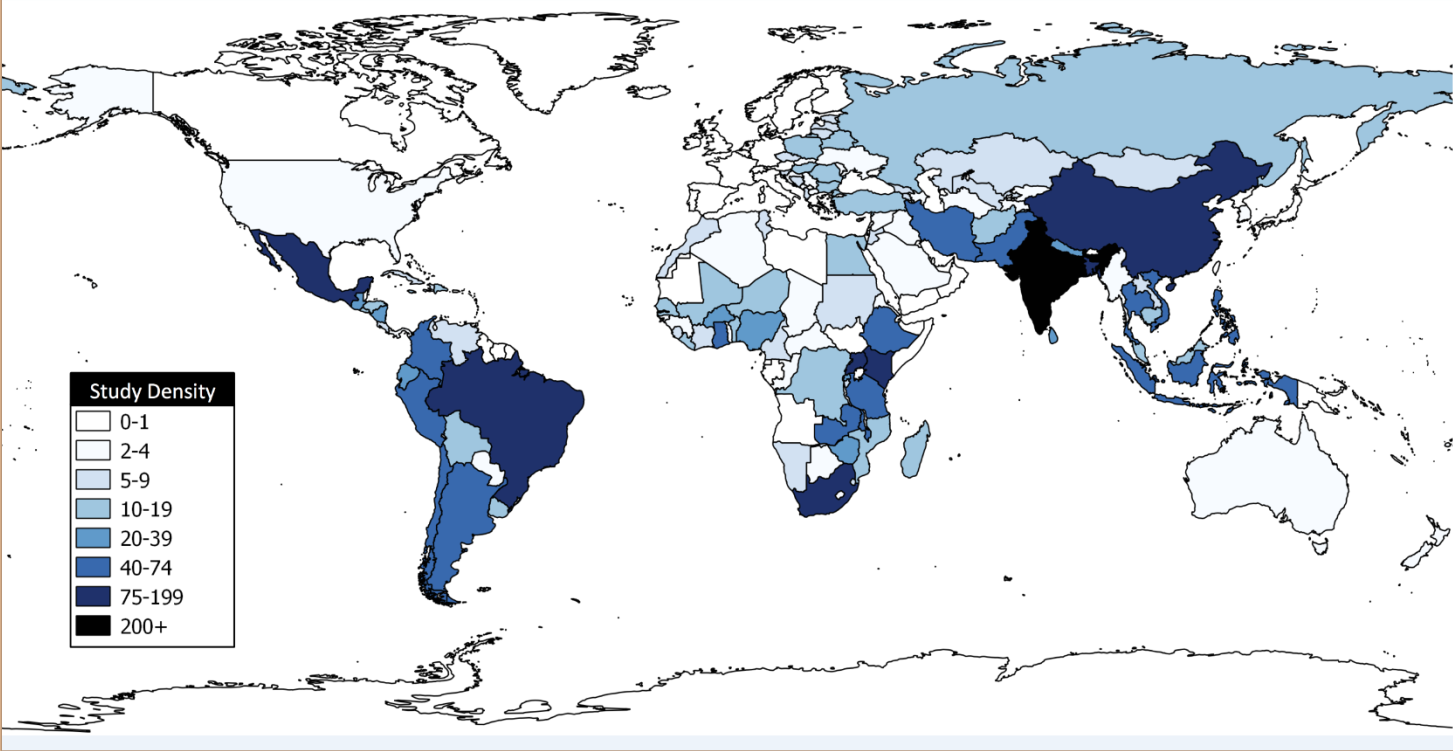

The following heat map shows that impact evaluations are concentrated mostly in South Asia, East Africa, South and Central America, and Southeast Asia. Meanwhile, notable gaps exist in other regions of the world like North, Central, and West Africa, and the Middle East. A very large share of studies (22.5 per cent) is also concentrated in just three countries: India, China and Mexico.

Impact evaluation heat map, studies published 1981-2012 (Cameron, Mishra, and Brown 2015)

CMB find a few more interesting (possibly disturbing) trends. The lag from endline data collection to publication is much longer for impact evaluations published in social science journals (6.18 years) than for those published in health science journals (3.75 years) or by banks and international lending agencies (3.55 years), universities and research institutes (3.54 years), and government agencies (1.00 years). Further, CMB find that impact evaluation authorship is dominated by researchers with institutional affiliations in Western Europe and North America (not including Mexico) (49.7 per cent), and that this dominance has only increased over the last five years.

In the coming months, we plan to revise our search and screening protocol to improve search results, to cover content published in additional languages, such as Spanish and Portuguese, and to increase the number of databases and websites we search. We have also developed systems to update our records at a regular time each year and will be expanding the number of sectors and subsectors in which we index studies. We also hope to direct future efforts towards improving web capabilities and introducing data visualisation tools to make the IER easier to use. Open data and research transparency are values we embrace at 3ie, which is why we are working to make the repository more accessible to the public by ultimately adopting best practices espoused by the Open Data Institute, Open Science Framework, and the Berkeley Initiative for Transparency in the Social Sciences.

The impact evaluation repository is an important public good. As we work to make more and better evidence increasingly accessible to the international development community, we are eager for suggestions. Is there an area you think we should explore? A service you’d like to see available through the IER? Sectors you’d like to be added to our list? Please suggest them in the comments below or email us at database@3ieimpact.org.