Securing and building peaceful societies: Where is the evidence and where is it missing?

It has been estimated that over 1.8 billion people, close to a quarter of the world’s population, live in fragile contexts (OECD, 2018). Given the current Covid-19 pandemic, this number is likely to increase rapidly, with unequal access to health services, decent jobs, and safe housing leading to growing mistrust and social unrest. Interventions that tackle the underlying causes of conflict and fragility – along with immediate responses to the pandemic – are therefore more relevant than ever.

In an attempt to provide an easy-to-use overview of the evidence base of peacebuilding interventions, 3ie, in collaboration with the German Institute for Development Evaluation (DEval), developed the Building peaceful societies evidence gap map (EGM).

The evidence base is growing, but the studies are unevenly distributed among intervention types and outcomes. Almost a third of the available evidence is clustered around mental health and psychosocial support interventions (MHPSS), which have also been frequently analysed in systematic reviews. Beyond MHPSS, there are smaller clusters of studies on gender equality behaviour change communications interventions, community-driven development and reconstruction, and a rapidly increasing evidence base for cash transfer and subsidies programmes.

In contrast to the programming reality, in which interventions are often complex, most impact evaluations look at interventions that pursue a single approach to building peace. Additionally, we see few applications of the theory-based, mixed-methods approaches that would be best suited to addressing complexity. Studies that incorporate a theory of change and mixed methods can help evaluators “open the black box” to understand why change may or may not be happening, and how the intervention has interacted with contextual factors, including barriers, facilitators and moderators.

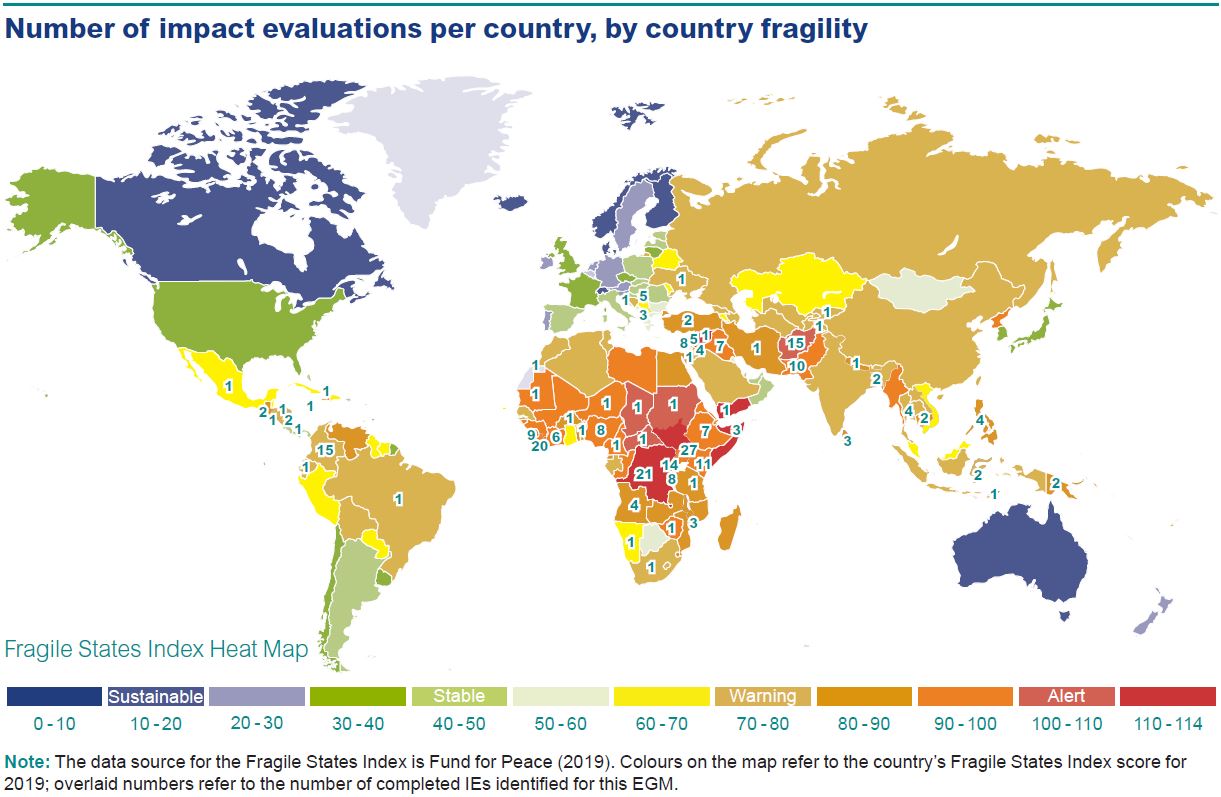

There are significant geographical evidence gaps, in particular a lack of evidence for several countries that have high levels of both fragility and development assistance. For instance, of the 10 most fragile countries according to the 2019 Fragile States Index, only the Democratic Republic of Congo and Afghanistan have a relatively substantial number of impact evaluations (15 and 12, respectively). For other fragile states that receive significant development assistance, there are very few studies or none at all. This includes countries like Syria, Yemen, Somalia, South Sudan, the Central African Republic, Chad, Sudan, Zimbabwe, Guinea and Haiti.

Although cost-effectiveness is an important question for programme funders and decision makers, very few studies report cost. Only five studies included some level of cost data. Three of these were studies of life skills and employment training. One MHPSS and one gender equality study also reported some cost evidence, while one ongoing study of a cash transfers and subsidies intervention committed to reporting cost evidence. This significantly limits the usefulness of the existing evidence base for decision makers.

An alarming number of studies don’t have ethics approvals even though the studies involve vulnerable populations. Despite widespread recognition of the importance of approaches such as ‘do no harm’ within the peacebuilding community, fewer than a third of the included impact evaluations reported having received ethical approval from an independent review board. Ethics approval is a core requirement of human-subjects research, particularly when the research deals with sensitive topics and vulnerable populations. The gap may partly reflect incomplete reporting, but it is nevertheless concerning.

What is needed?

We identified 276 studies, including ongoing and completed impact evaluations (244) and systematic reviews (34), and the evidence base is still growing. To make future investments in research more useful, we suggest that researchers, implementers and funders consider the following steps to improve the evidence base and its value to decision makers:

- Coordinate new research to avoid duplication and further fragmentation of the evidence base.

- Commission more evaluations to address the significant geographic and substantive evidence gaps, including studies of complex, multi-component interventions.

- Agree on and adopt a common set of outcome measures across studies to enhance the potential for cross-study lessons and evidence synthesis.

- Ensure new research incorporates gender and equity considerations and focuses on key population groups of concern.

- Adopt study designs that are both rigorous and relevant: mixed-methods, theory-based approaches with cost-effectiveness calculations.

- Make funding conditional on researchers following guidelines and requirements for ethical research, including approval of study protocols and procedures by relevant review boards.

How can this EGM be used?

When designing programmes, policymakers and implementers can consult this EGM to find out whether rigorous evidence exists (including impact evaluations or systematic reviews) for the programme in question, considering the following:

- Where relevant systematic reviews exist, consult the studies to inform programme design. Even low-quality reviews may present useful information, such as descriptions of the evidence base or theories of change, although effectiveness findings should be interpreted with caution;

- Where no evidence exists for an intervention, or none exists from the relevant geography, consider including an impact evaluation within the new programme, taking into account the implications for research noted above;

- Where there are existing evaluations but no recent or high-quality systematic reviews, consult the primary studies and take the findings into consideration for policy and programme design. We suggest you:

  - Use caution when interpreting the findings. Conclusions regarding intervention effectiveness should not be drawn from single studies or by counting the number of ‘successful’ interventions. Further, not all results may be directly transferable to different contexts. Policymakers and practitioners should consult impact evaluations as well as sector and regional specialists when judging the transferability of results; and

  - If the cluster of evidence is large enough, consider commissioning a systematic review. Since these take time to develop, the EGM should be consulted as early as possible in the planning stages when designing new programmes or strategies.

We presented the results of this work in a webinar, and you can see the recording here.
