As we crossed the halfway point of the 2030 Agenda for Sustainable Development, we examined the state of the evidence for all 17 Sustainable Development Goals (SDGs). Joining forces with DEval* – and with funding from the German Federal Ministry for Economic Cooperation and Development (BMZ) – we did a deep dive into the effectiveness evidence in our Development Evidence Portal (DEP) to unpack the trends and themes across SDGs. Our report contains a series of dashboards summarizing the state of the evidence for each of the 17 SDGs between 1990 and 2022, and in this blog post we present five key takeaways about what this means for the sustainable development agenda.

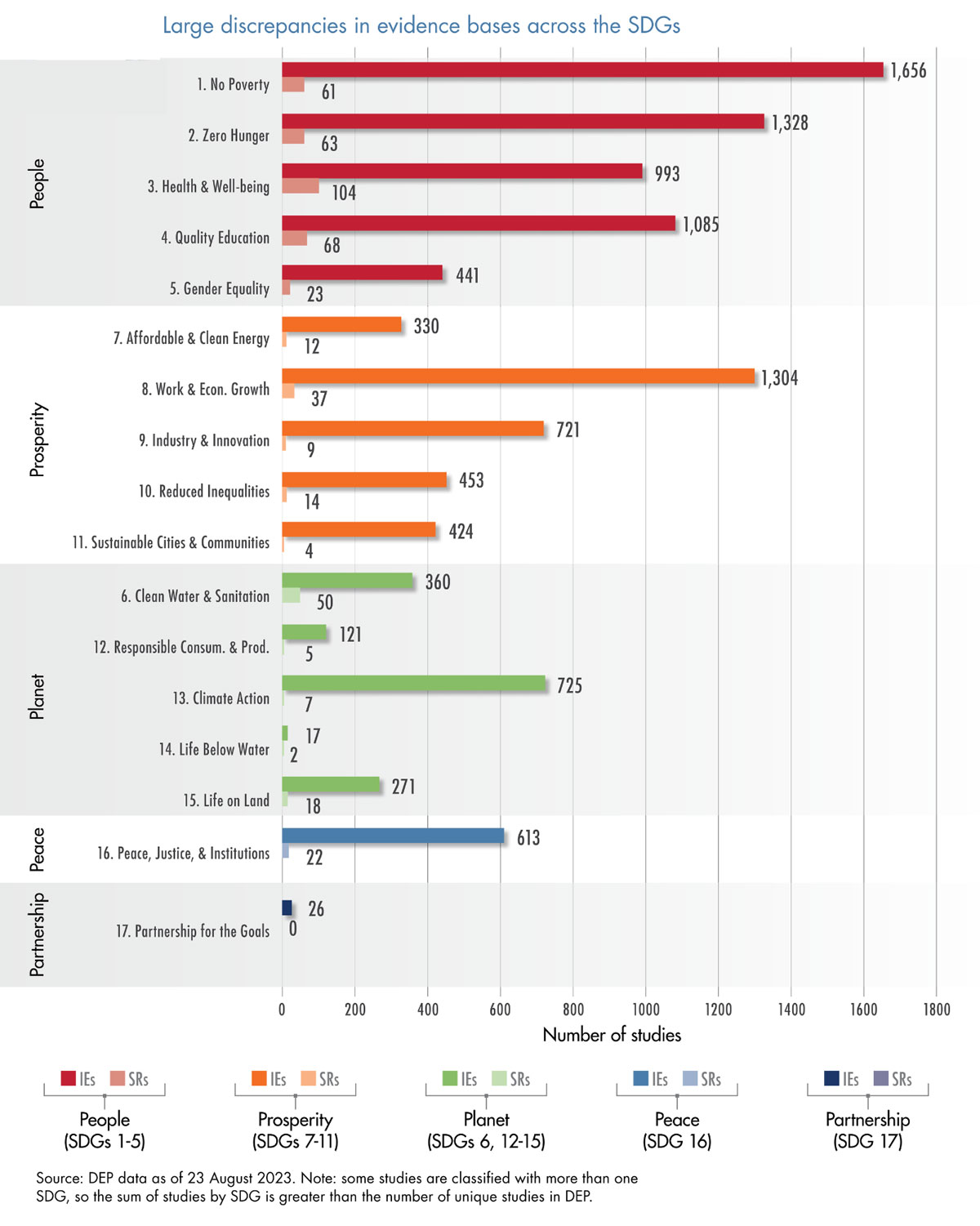

1. The thematic distribution of evidence is very lopsided: plenty of evidence for some SDGs and very little for others.

The availability of evidence varies significantly across SDGs. Whereas SDGs 1 (No Poverty), 2 (Zero Hunger), 4 (Quality Education), and 8 (Decent Work and Economic Growth) each have abundant evidence, with more than 1,000 impact evaluations (IEs), SDGs 14 (Life Below Water) and 17 (Partnerships for the Goals) each have fewer than 30 IEs.

Looking at the five thematic categories, the “5 Ps” of People, Prosperity, Planet, Peace, and Partnership, we find that the “People” group (SDGs 1–5) is the most represented, with SDG 1 covered by more than 1,500 IEs. Evidence for the “Planet” (SDGs 6 and 12–15) and “Prosperity” (SDGs 7–11) categories has grown rapidly since 2016, with particularly stark growth in studies from China over the last three years. The “Peace” and “Partnership” groups remain under-evaluated.

In general, the DEP data does not allow us to say much about why some topics are studied more than others. However, some thematic categories, such as “People”, are likely broader in the range of interventions they include. Other areas may be less represented because they are more difficult to evaluate, for example because the interventions involved are not easily amenable to randomization. The data available in the DEP can provide the basis for more detailed mapping and analysis.

Takeaway: To address these imbalances, the wider evaluation community will need to up its game on coordination and innovation. Using existing evidence more systematically, avoiding duplication of effort, and focusing scarce resources on critical gaps will require greater transparency about planned, ongoing, and completed evaluations. Less expensive and less invasive methods, such as geospatial impact evaluation, as well as theory-based qualitative approaches to impact assessment, such as process tracing, may help close the remaining gaps.

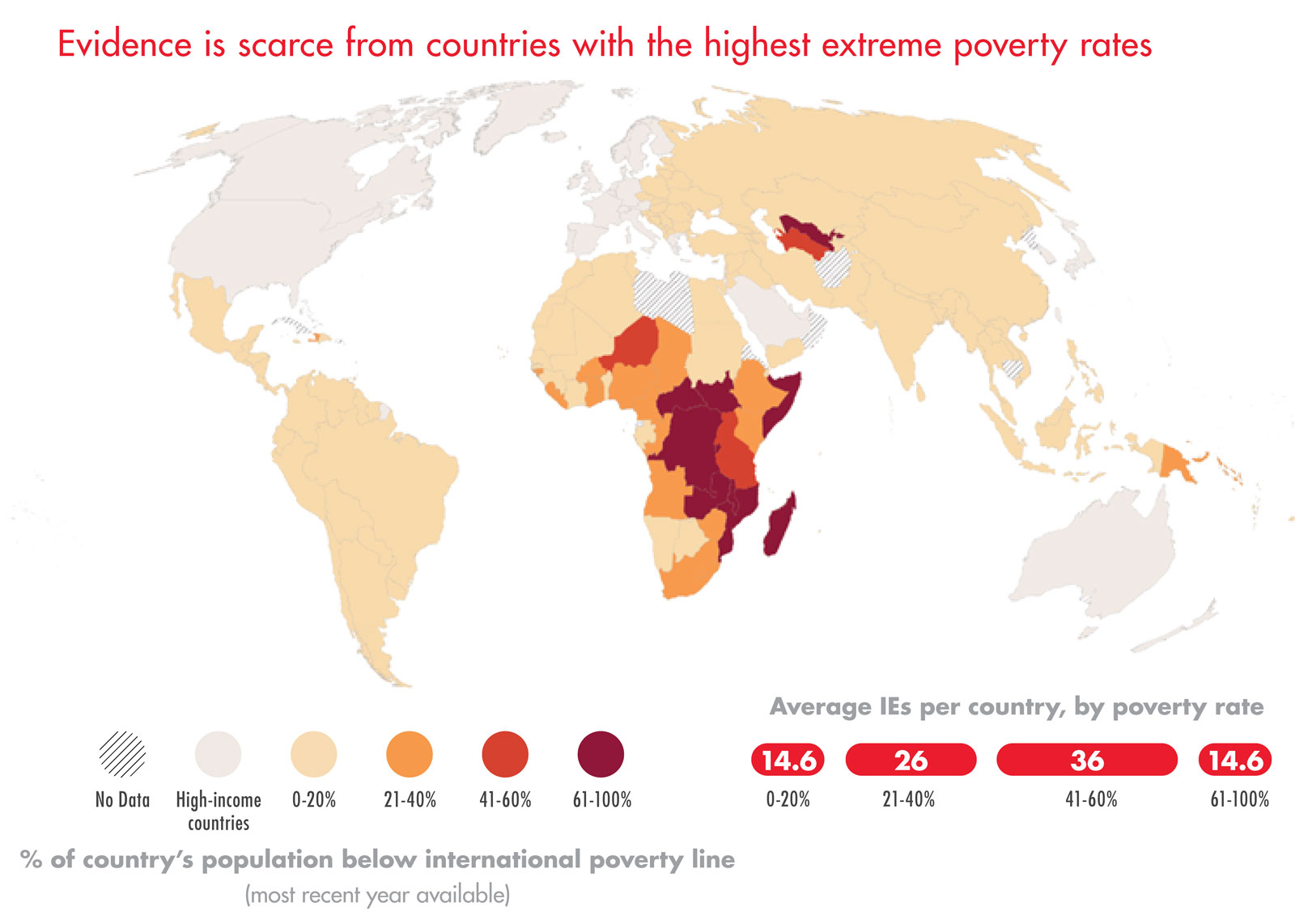

2. We often lack evidence where it is needed most.

We assessed the availability of evidence pertaining to specific SDGs and compared the volume of evidence with key country-level indicators related to those goals. We found a scarcity of evidence from the countries facing the most extreme problems, although there was often substantial evidence from countries where these problems are moderately severe. This pattern points to a global shortage of the evidence needed for informed decision-making precisely in the contexts where evidence-based interventions are most needed to achieve the SDGs.

In fact, in a number of countries evidence is lacking on almost every topic. These “evidence deserts” are mainly located in Central Africa and Central Asia.

Takeaway: Geographic imbalances in the distribution of evidence require targeted efforts in evaluation capacity development. Our findings should be a call to action for the Global Evaluation Initiative and other actors supporting evidence literacy, generation, and use. Development partners should also consider piloting impact evaluations in under-researched countries. In the most fragile countries, remote evaluation methods such as geospatial impact evaluation may again be a reasonable first step.

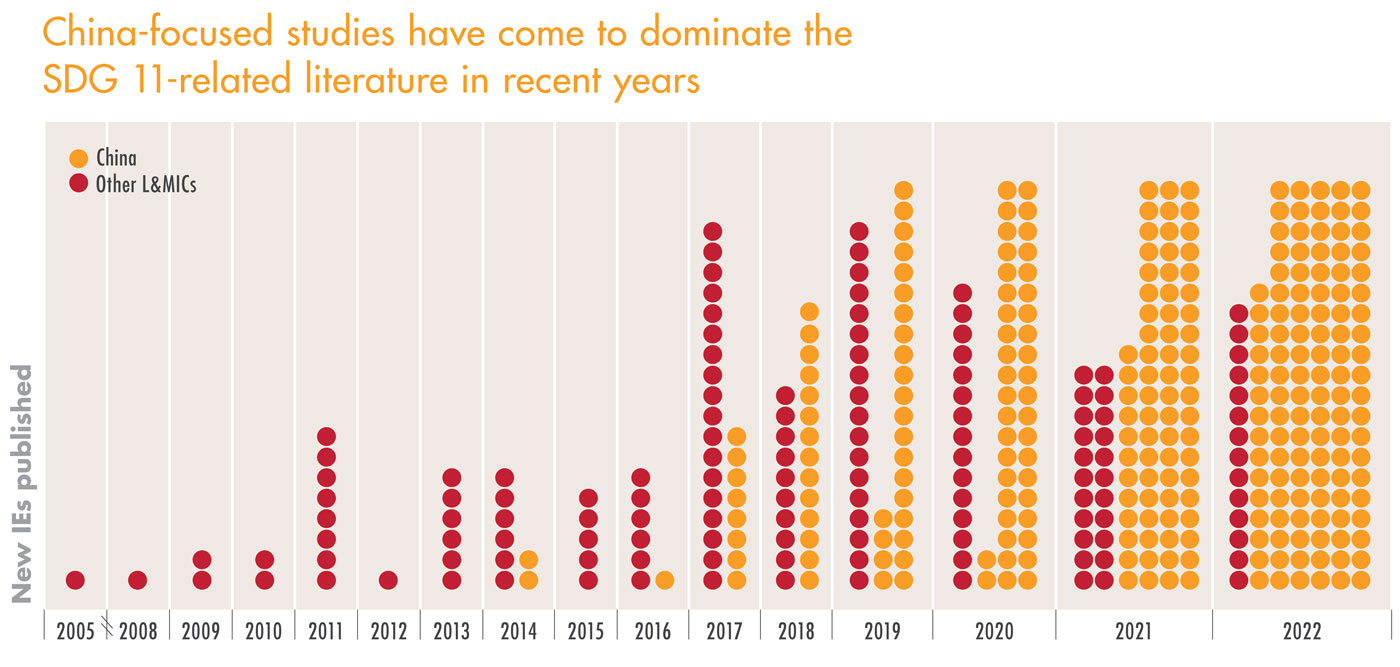

3. China is producing impact evaluations at an unprecedented rate, but they focus on a narrow range of topics.

There has been a recent surge in research output on SDGs 7 (Affordable and Clean Energy), 8 (Decent Work and Economic Growth), 11 (Sustainable Cities and Communities), and 13 (Climate Action). This increase is primarily due to a sharp rise in studies of Chinese policies, conducted by researchers in China and funded by Chinese government agencies. Evaluations of interventions associated with these SDGs often use quasi-experimental methods, which are particularly well suited to analyzing secondary datasets.

Takeaway: China is making a strong contribution to the global evidence base. This could encourage others to engage in collaborative, complementary, or competitive evidence generation. Science and evaluation can be great areas of collaboration between countries, and multi-country validation is much needed in many areas. Prioritizing thematic areas not yet well covered by China and others is another strategy for countries to address their specific evidence needs. Finally, competition for the most interesting questions, the best methods, and the most refined analyses can raise quality across the board. We need to strengthen the voices of diverse countries and institutions to make the conversation on what works best in international development even more vibrant in the future.

4. There is a lack of reliable syntheses for most SDGs.

Only a handful of SDGs boast extensive pools of systematic reviews that provide reliable findings, and even these often cover a limited range of interventions. Moreover, most reviews do not meet the highest methodological standards.

Takeaway: Given the range of interventions and contexts, and in particular the rate at which new studies emerge, conducting individual systematic reviews and updating them every few years hardly seems sustainable. Experimenting with “living” reviews, which are updated regularly, in key areas is one promising way to approach this challenge. Artificial intelligence could support evaluation synthesis by making screening more efficient and enabling continuous coverage over time. At the same time, it is not a panacea, and expert coding of findings will remain an important part of evidence synthesis for the foreseeable future. Building capacity for evaluation synthesis globally will be critical to ensure an inclusive use of evidence in the future.

5. Gender and equity considerations receive limited attention in evaluation research.

Only a minority of studies use approaches such as subgroup analysis to assess whether interventions benefit vulnerable groups, or integrate measures of inequality into their outcomes. For every SDG except SDG 5 (Gender Equality), more than 65 percent of studies do not consider gender or equity in their research design.

Takeaway: Funders should prioritize integrating gender and equity considerations into study designs. One way to achieve this is to actively engage local researchers who have the contextual knowledge needed to address these dimensions effectively. By insisting that gender and equity considerations be included, funders not only promote more comprehensive and socially responsible research but also empower local communities by amplifying their voices and perspectives. This approach enhances the relevance and impact of research outcomes and contributes to greater equity and inclusivity in the evaluation landscape.

Read the full report.

*DEval: German Institute for Development Evaluation